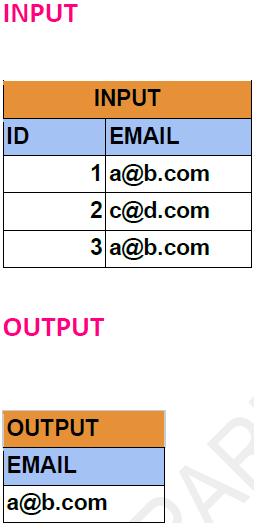

Problem Statement: -

Report all the duplicate emails. Note that it's guaranteed that the email field is not NULL.

Dataframe API Solution: -

from pyspark.sql.types import *

from pyspark.sql.functions import *

from pyspark.sql.window import *

# Define the schema for the "emails" table

emails_schema = StructType([ StructField("id", IntegerType(), True), StructField("email", StringType(), True) ])

# Define data for the "emails" table

emails_data = [ (1, 'a@b.com'), (2, 'c@d.com'), (3, 'a@b.com') ]

email_df = spark.createDataFrame(emails_data,emails_schema)

email_df.show()

+---+-------+

| id| email|

+---+-------+

| 1|a@b.com|

| 2|c@d.com|

| 3|a@b.com|

+---+-------+

df_grouped = email_df.groupBy("email").count().filter(col("count") > 1)

df_grouped.show()

+-------+-----+

| email|count|

+-------+-----+

|a@b.com| 2|

+-------+-----+

Spark SQL Solution: -

email_df.createOrReplaceTempView("Mails")

result_df = spark.sql("""

SELECT

email,

COUNT(*) AS Count

FROM Mails

GROUP BY Email

HAVING Count>1

""")

result_df.show()

+-------+-----+

| email|Count|

+-------+-----+

|a@b.com| 2|

+-------+-----+