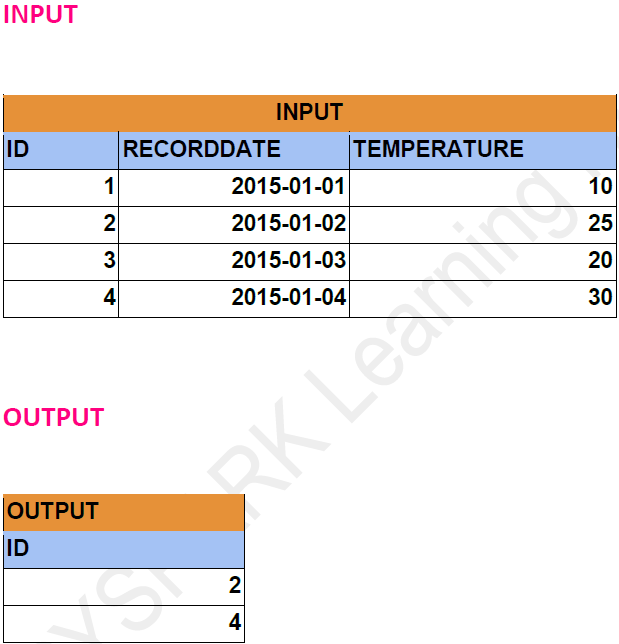

Problem Statement: -

Find all dates' Id with higher temperatures compared to its previous dates (yesterday).

Return the result table in any order.

Dataframe API Solution: -

from pyspark.sql.types import *

from pyspark.sql.functions import *

from pyspark.sql.window import *

# Define the schema for the "Weather" table

weather_schema = StructType([ StructField("id", IntegerType(), True), StructField("recordDate", StringType(), True), StructField("temperature", IntegerType(), True) ])

# Define data for the "Weather" table

weather_data = [ (1, '2015-01-01', 10), (2, '2015-01-02', 25), (3, '2015-01-03', 20), (4, '2015-01-04', 30) ]

w_df = spark.createDataFrame(weather_data,weather_schema)

w_df = w_df.withColumn("recordDate",to_date("recordDate","yyyy-MM-dd"))

w_df.show()

+---+----------+-----------+

| id|recordDate|temperature|

+---+----------+-----------+

| 1|2015-01-01| 10|

| 2|2015-01-02| 25|

| 3|2015-01-03| 20|

| 4|2015-01-04| 30|

+---+----------+-----------+

Window_spec = Window.orderBy(col("recordDate").asc())

lag_wthr_df = wthr_df.withColumn("Prev_Day_temp",lag(col("temperature")).over(Window_spec))

lag_wthr_df.show()

+---+----------+-----------+-------------+

| id|recordDate|temperature|Prev_Day_temp|

+---+----------+-----------+-------------+

| 1|2015-01-01| 10| NULL|

| 2|2015-01-02| 25| 10|

| 3|2015-01-03| 20| 25|

| 4|2015-01-04| 30| 20|

+---+----------+-----------+-------------+

result_df = lag_wthr_df.filter(col("temperature") > col("Prev_Day_temp")).select("id")

result_df.show()

+---+

| id|

+---+

| 2|

| 4|

+---+

Spark SQL Solution: -

wthr_df.createOrReplaceTempView("tmpr")

result_df = spark.sql("""

WITH CTE AS(

SELECT

id,

temperature,

lag(temperature) OVER(ORDER BY recorddate) AS Prev_day_temp

FROM tmpr

)

SELECT id

FROM CTE

WHERE temperature > Prev_day_temp

""")

result_df.show()